Last updated: 8 September 2025

For decades, the idea of machines that can think, reason, and learn like humans has fascinated scientists, philosophers, and science fiction writers alike. Today, we are closer than ever to that vision — yet still far from truly realizing it.

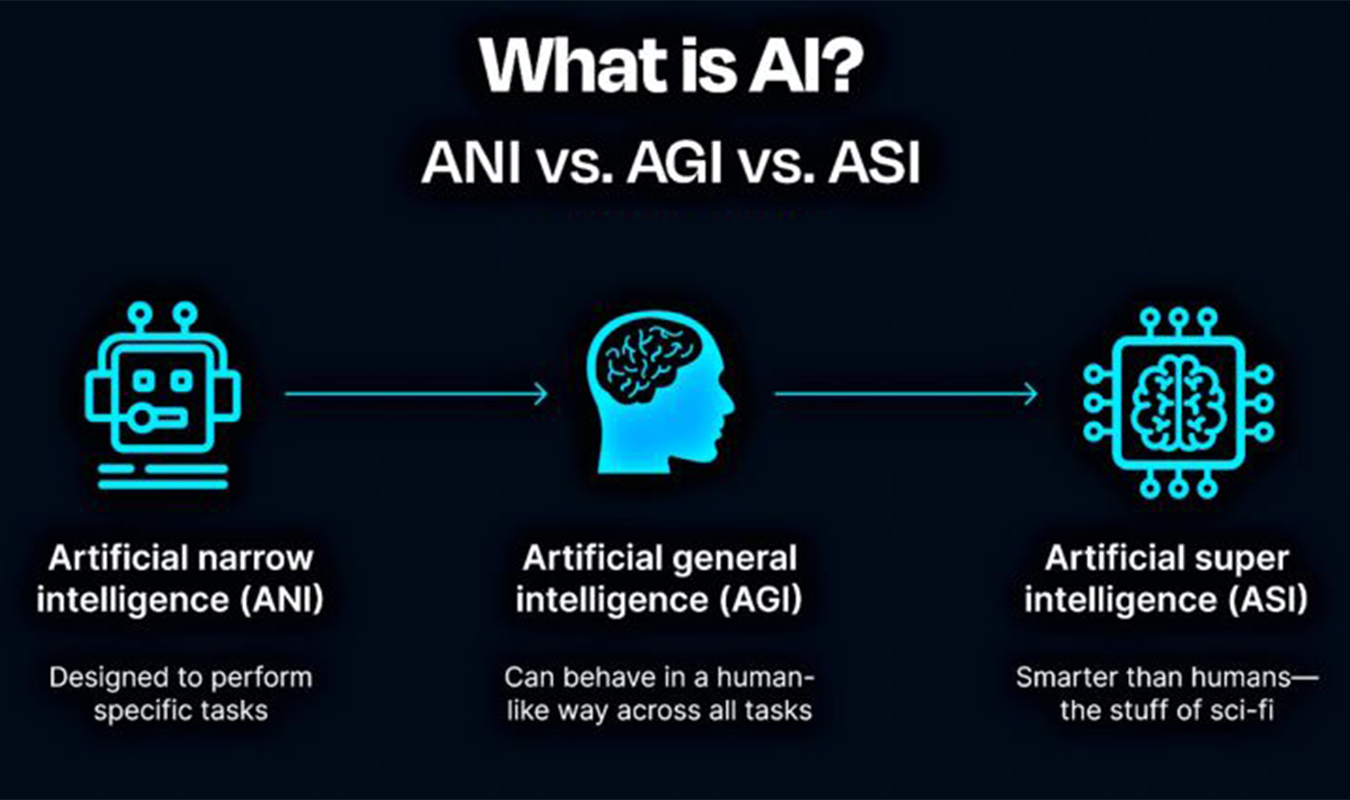

The term Artificial General Intelligence (AGI) represents the next great frontier of artificial intelligence: systems capable of understanding, learning, and applying knowledge across diverse domains, matching or even surpassing human cognitive ability.

While today's AI can write, draw, and even code, it remains narrow — brilliant within its defined boundaries, but helpless outside them. The leap from narrow AI to general AI is not just a technological challenge — it's a philosophical, ethical, and existential one.

This in-depth guide explores what AGI really means, how researchers are pursuing it, and what the journey to superintelligence might look like — step by step.

What Is Artificial General Intelligence?

Artificial General Intelligence (AGI) refers to a machine's ability to understand, learn, and apply knowledge across a wide range of tasks at or beyond human capability.

Unlike today's narrow AI systems — trained for specific applications like image recognition, text generation, or translation — an AGI could:

- Learn new tasks without explicit retraining.

- Transfer knowledge from one domain to another.

- Reason abstractly and plan over long time horizons.

- Exhibit creativity, self-reflection, and adaptation.

In essence, AGI wouldn't just perform tasks — it would understand them.

"AGI is the point at which machines possess the flexible intelligence that characterizes human cognition."

Nick Bostrom, author of Superintelligence

From Narrow AI to AGI: The Evolution of Intelligence

🧠 Narrow AI: Expert but Limited

Modern AI systems — including GPT models, recommendation engines, and self-driving cars — are narrow by design. They excel in well-defined contexts with abundant training data, but fail when faced with ambiguity or novelty.

Example:

A large language model like ChatGPT can discuss quantum mechanics fluently, yet it doesn't understand physics the way a physicist does. It models patterns in language rather than the underlying concepts.

🌐 Artificial General Intelligence: Broad and Adaptive

An AGI would have transferable, flexible intelligence. If it learns to play chess, it could apply strategic reasoning to business or politics. If it reads a biology textbook, it could design new experiments autonomously.

AGI implies common sense, self-directed learning, and metacognition (thinking about thinking).

🚀 Artificial Superintelligence (ASI): Beyond Human Capability

Once AGI is achieved, it may rapidly evolve into Artificial Superintelligence (ASI) — systems that vastly exceed human intelligence in every domain, including creativity, emotional intelligence, and problem-solving.

This transition — often called the intelligence explosion — is both awe-inspiring and deeply concerning. If AGI can recursively improve itself, it could surpass human comprehension within years or even days.

The Core Challenges on the Path to AGI

Building AGI is not just about bigger models or more data. It requires solving several fundamental scientific puzzles that go beyond current deep learning techniques.

Let's examine the key challenges:

3.1 Transfer Learning and Generalization

Humans can learn one task and apply what they learned to another. Current AI struggles with generalization, that is, performing well on inputs that differ from its training data.

AGI must bridge that gap, developing transferable understanding that applies across contexts.

3.2 Common Sense and World Models

Humans rely on common sense: implicit knowledge about how the world works. Current AI models often lack robust world models and can make nonsensical predictions (e.g., suggesting you microwave ice cream to keep it cold).

AGI must internalize cause-and-effect relationships, not just correlations.

3.3 Memory, Attention, and Long-Term Reasoning

Most language models operate within a fixed context window (for example, 8K or 128K tokens) and retain nothing outside it. AGI would need persistent, long-term memory: the ability to recall and build upon past experiences.

Techniques such as Retrieval-Augmented Generation (RAG), research architectures like Neural Turing Machines, and vector databases are early steps toward this.
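To make the retrieval idea concrete, here is a minimal sketch of a long-term memory that stores past notes and recalls the most relevant ones for a new query. It assumes a toy bag-of-words "embedding" and cosine similarity in place of the learned embedding models and vector databases used in practice; `MemoryStore`, `embed`, and `cosine` are hypothetical names, not any library's API.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy 'embedding': bag-of-words term counts (real systems use learned vectors)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse term-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


class MemoryStore:
    """Hypothetical long-term memory: store past notes, recall the most similar ones."""

    def __init__(self) -> None:
        self.items = []  # list of (text, vector) pairs

    def add(self, text: str) -> None:
        self.items.append((text, embed(text)))

    def recall(self, query: str, k: int = 2) -> list[str]:
        q = embed(query)
        ranked = sorted(self.items, key=lambda item: cosine(q, item[1]), reverse=True)
        return [text for text, _ in ranked[:k]]


memory = MemoryStore()
memory.add("The user prefers answers in metric units.")
memory.add("The project deadline is the end of the quarter.")
memory.add("The user is allergic to peanuts.")

# The recalled note is what a RAG-style system would add to the model's prompt.
context = memory.recall("What units does the user prefer?", k=1)
print(context)
```

Because recalled text is injected into the prompt, the model can draw on information that no longer fits in its context window. Swapping `embed` for a learned embedding model and the plain list for a vector index is what lets this idea scale.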

3.4 Self-Supervision and Curiosity

Humans learn through exploration, curiosity, and play — not by being spoon-fed labeled data. AGI must exhibit intrinsic motivation: the drive to learn autonomously and improve without explicit external rewards.

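Research in self-supervised learning and curiosity-driven reinforcement learning aims to simulate that drive. Reinforcement learning from human feedback (RLHF), by contrast, still depends on explicit external judgments from people.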
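One simple family of intrinsic-motivation methods rewards an agent for visiting states it has rarely seen. The sketch below assumes a count-based bonus of the form beta / sqrt(N(s)), where N(s) counts visits to state s. `NoveltyBonus` and `total_reward` are illustrative names, and much curiosity research uses learned prediction error instead of raw counts.

```python
import math
from collections import defaultdict


class NoveltyBonus:
    """Hypothetical count-based curiosity signal: rarely visited states earn a larger bonus.

    bonus(s) = beta / sqrt(N(s)), where N(s) counts visits to state s (including this one).
    """

    def __init__(self, beta: float = 1.0) -> None:
        self.beta = beta
        self.counts = defaultdict(int)

    def __call__(self, state: str) -> float:
        self.counts[state] += 1
        return self.beta / math.sqrt(self.counts[state])


def total_reward(extrinsic: float, state: str, bonus: NoveltyBonus) -> float:
    # The agent optimizes external reward plus an internally generated novelty reward.
    return extrinsic + bonus(state)


curiosity = NoveltyBonus(beta=1.0)
for step, state in enumerate(["kitchen", "kitchen", "kitchen", "garden"]):
    print(step, state, round(total_reward(0.0, state, curiosity), 3))
```

Even when the external reward is zero, the agent still receives a signal that pushes it toward unexplored states, and that signal fades as a state becomes familiar.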

3.5 Embodiment and Sensorimotor Experience

Some cognitive scientists argue that intelligence cannot exist without embodiment — the ability to act and perceive in a physical environment.

That's why robotics and embodied AI (like Tesla's Optimus or DeepMind's control systems) are considered crucial stepping stones toward AGI.

Competing Approaches to Building AGI

Researchers and companies are pursuing multiple pathways toward general intelligence. Here are the main paradigms shaping the AGI race:

4.1 Scaling Deep Learning

OpenAI, Anthropic, Google DeepMind, and others continue to build on scaling laws, training ever-larger transformer models on massive datasets.

The theory: intelligence emerges from scale. More data → bigger models → richer representations → emergent reasoning.
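Scaling laws are usually stated as power laws: loss falls predictably as parameters, data, or compute grow. The sketch below assumes the simple single-variable form L(N) = (N_c / N)^alpha. The default constants are illustrative placeholders, not a fit for any real model. Note that such a curve predicts a training-loss metric, not "understanding" as such.

```python
def power_law_loss(n_params: float, n_c: float = 8.8e13, alpha: float = 0.076) -> float:
    """Illustrative scaling-law curve: loss falls as a power of model size N.

    L(N) = (N_c / N) ** alpha. The default constants are placeholders for illustration.
    """
    return (n_c / n_params) ** alpha


for n in [1e8, 1e9, 1e10, 1e11]:
    print(f"{n:.0e} params -> loss {power_law_loss(n):.3f}")

# Each 10x increase in parameters multiplies the loss by the same factor, 10 ** -alpha (about 0.84 here).
```

Because every tenfold increase in scale shrinks the loss by the same constant factor, each further gain of that size requires multiplying model size again.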

But critics argue that scaling alone may not yield true understanding — only more sophisticated imitation.

4.2 Neuro-Symbolic AI

This hybrid approach combines neural networks (for perception) with symbolic reasoning (for logic and rules).

Neuro-symbolic AI could help machines:

- Understand cause-and-effect

- Perform reasoning and deduction

- Build structured world models

IBM, MIT, and Stanford researchers are leading work in this space, believing it could bring the "common sense" missing from current AI.
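The division of labor in neuro-symbolic AI can be sketched in a few lines. A perception component emits soft confidence scores, and a threshold turns them into discrete facts. A symbolic rule engine then derives new facts by forward chaining. The scores and rules here are hypothetical stand-ins for a trained network and a curated knowledge base.

```python
# Stand-in for a neural perception module: hypothetical confidence scores for detected facts.
perception_scores = {
    ("glass", "on", "table_edge"): 0.92,
    ("table", "is", "tilted"): 0.81,
    ("glass", "is", "full"): 0.40,
}

# Symbolic layer: if-then rules over discrete facts, as (premises, conclusion) pairs.
RULES = [
    ({("glass", "on", "table_edge"), ("table", "is", "tilted")}, ("glass", "will", "fall")),
    ({("glass", "will", "fall"), ("glass", "is", "full")}, ("floor", "will_be", "wet")),
]


def to_facts(scores: dict, threshold: float = 0.5) -> set:
    """Turn soft neural outputs into crisp symbols by thresholding (one simple choice)."""
    return {fact for fact, p in scores.items() if p >= threshold}


def forward_chain(facts: set, rules: list) -> set:
    """Apply rules repeatedly until no new conclusions can be derived."""
    derived = set(facts)
    changed = True
    while changed:
        changed = False
        for premises, conclusion in rules:
            if premises <= derived and conclusion not in derived:
                derived.add(conclusion)
                changed = True
    return derived


conclusions = forward_chain(to_facts(perception_scores), RULES)
print(sorted(conclusions - to_facts(perception_scores)))
```

Unlike a purely neural prediction, every derived fact can be traced back to the rule and premises that produced it. That traceability is part of the appeal for cause-and-effect reasoning.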

4.3 Cognitive Architectures

Cognitive architectures like Soar, ACT-R, and Sigma attempt to replicate the structure of human cognition, from working memory to problem-solving routines.

These systems aim to simulate human thought, rather than merely approximate its outputs.

4.4 Reinforcement Learning at Scale

DeepMind's AlphaGo, AlphaZero, and MuZero proved that reinforcement learning (RL) can produce remarkable adaptive behavior.

Next-generation RL systems are exploring how agents can learn to learn, mastering new environments autonomously — a potential foundation for AGI.
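The core reinforcement-learning loop is to act, observe a reward, and update value estimates. It can be shown with plain tabular Q-learning on a toy five-state corridor. This is a deliberately simple stand-in: AlphaZero and MuZero combine self-play, learned neural networks, and tree search, none of which appear here. The environment and constants are assumptions chosen for illustration.

```python
import random

N_STATES = 5          # states 0..4 in a 1-D corridor; state 4 is the goal
ACTIONS = (0, 1)      # 0 = move left, 1 = move right
ALPHA, GAMMA, EPSILON = 0.5, 0.9, 0.2


def step(state: int, action: int) -> tuple[int, float, bool]:
    """Deterministic toy environment: reward 1.0 only when the goal is reached."""
    nxt = max(0, state - 1) if action == 0 else min(N_STATES - 1, state + 1)
    done = nxt == N_STATES - 1
    return nxt, (1.0 if done else 0.0), done


def greedy(q: list[list[float]], state: int, rng: random.Random) -> int:
    best = max(q[state])
    return rng.choice([a for a in ACTIONS if q[state][a] == best])  # break ties randomly


def train(episodes: int = 1000, seed: int = 0) -> list[list[float]]:
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]
    for _ in range(episodes):
        state, done, steps = 0, False, 0
        while not done and steps < 100:
            # Epsilon-greedy: mostly exploit current estimates, sometimes explore at random.
            action = rng.choice(ACTIONS) if rng.random() < EPSILON else greedy(q, state, rng)
            nxt, reward, done = step(state, action)
            # Q-learning update: move Q(s, a) toward reward + discounted best future value.
            target = reward + (0.0 if done else GAMMA * max(q[nxt]))
            q[state][action] += ALPHA * (target - q[state][action])
            state, steps = nxt, steps + 1
    return q


q_table = train()
policy = [max(ACTIONS, key=lambda a: q_table[s][a]) for s in range(N_STATES - 1)]
print(policy)  # learned action for each non-goal state
```

After training, the greedy policy should move right from every non-goal state. The agent learns that behavior purely from trial, error, and a single reward signal at the goal.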

4.5 Brain Simulation and Neuroscience-Inspired AI

Some researchers take inspiration directly from biology. Large-scale efforts such as the Blue Brain Project and the Human Brain Project have worked to simulate the brain's neural circuits in silicon.

While full brain simulation is far from practical today, studying neuronal efficiency, sparsity, and hierarchical processing continues to inform AGI design.

The Role of Language Models on the Path to AGI

Large Language Models (LLMs) like GPT-5, Claude, and Gemini represent perhaps the most promising near-term step toward AGI.

They demonstrate:

- Multi-domain competence (coding, writing, reasoning)

- Contextual understanding

- Adaptive problem-solving

However, they still lack:

- True understanding of concepts

- Grounded experience in the physical world

- Consistent self-awareness

Future AGI systems may combine LLMs with:

- Persistent memory (for lifelong learning)

- Multi-modal inputs (vision, audio, sensors)

- Embodied agents that act and learn in the real world

Measuring Progress Toward AGI

How will we know when AGI arrives? Researchers propose several benchmarks and tests:

| Test | Description | Goal |
|---|---|---|
| Turing Test | Can the AI imitate human conversation indistinguishably? | Behavioral indistinguishability |
| Winograd Schema Challenge | Tests commonsense reasoning via sentences with ambiguous pronouns | Contextual understanding |
| ARC (Abstraction and Reasoning Corpus) | Tests generalization from few examples | Abstract reasoning |
| HELM (Holistic Evaluation of Language Models) | Evaluates models on metrics including accuracy, calibration, robustness, fairness, and toxicity | Trustworthy, well-rounded behavior |
| Autonomous Research Task | Can the AI autonomously form hypotheses and test them? | Self-directed learning |

No single benchmark is likely to settle the question. True AGI would need to match or outperform humans across many cognitive domains at once.
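ARC tasks are distributed as JSON. A few "train" input/output grid pairs demonstrate a hidden transformation, and the solver must apply it to "test" inputs. The sketch below uses a made-up task and a hypothetical `candidate_solver` to show the shape of the data and the strict exact-match scoring. It does not reproduce the official evaluation harness.

```python
import json

# A toy task in the ARC JSON layout: "train" demonstration pairs and "test" pairs,
# each grid a list of rows of integers (colors 0-9). The task itself is made up.
TASK = json.loads("""
{
  "train": [
    {"input": [[1, 0, 0], [2, 2, 0]], "output": [[0, 0, 1], [0, 2, 2]]},
    {"input": [[3, 0], [0, 4]], "output": [[0, 3], [4, 0]]}
  ],
  "test": [
    {"input": [[5, 6, 0], [0, 0, 7]], "output": [[0, 6, 5], [7, 0, 0]]}
  ]
}
""")


def candidate_solver(grid):
    """Hypothetical solver that hypothesizes 'mirror each row left-to-right'."""
    return [list(reversed(row)) for row in grid]


def fits_demonstrations(solver, task) -> bool:
    """Check the hypothesis against every training pair before trusting it."""
    return all(solver(p["input"]) == p["output"] for p in task["train"])


def score(solver, task) -> float:
    """Exact-match scoring: a test prediction counts only if the whole grid matches."""
    pairs = task["test"]
    return sum(solver(p["input"]) == p["output"] for p in pairs) / len(pairs)


print(fits_demonstrations(candidate_solver, TASK), score(candidate_solver, TASK))
```

The hard part is inferring the transformation from only two or three examples. Checking a guessed rule, as this code does, is the easy part.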

The Societal Impact of AGI

🌍 Economic Transformation

AGI could automate not just manual labor, but creative and cognitive work — rewriting the global economy. From design and law to education and medicine, no field would remain untouched.

Some predict massive productivity gains; others fear displacement and inequality.

"AGI could be the last invention humanity needs to make — if we manage it wisely."

🧑💻 The Future of Work

In an AGI-powered world, human roles may shift toward:

- Creativity and empathy-driven work

- Oversight and ethics of intelligent systems

- Human-AI collaboration ("centaur intelligence")

The challenge will be redistributing value in a world where machines can do almost everything.

🧩 Ethics, Control, and Safety

Perhaps the greatest risk of AGI is not misuse, but misalignment — an intelligent system pursuing goals that diverge from human values.

This is the Alignment Problem, and it drives entire research fields focused on:

- AI safety

- Value alignment

- Interpretability

- Human-in-the-loop oversight

Organizations like OpenAI, Anthropic, and DeepMind dedicate significant research to ensuring AGI is safe and beneficial.

The Road to Superintelligence

If AGI represents human-level intelligence, Superintelligence (ASI) represents the next leap: machines vastly smarter than any human.

8.1 Recursive Self-Improvement

Once AGI can improve its own architecture and algorithms, it could accelerate its intelligence in a self-reinforcing feedback loop — the so-called intelligence explosion.

The transition from AGI → ASI could happen in years, or even hours, depending on control mechanisms.
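The intuition behind the feedback loop can be shown with a toy calculation. If each improvement step adds a fixed amount, capability grows linearly. If the size of each step is proportional to current capability, growth compounds exponentially. The rates below are arbitrary assumptions, and the model ignores real-world bottlenecks such as compute, energy, and data. It illustrates the shape of the argument, not a forecast.

```python
def simulate(steps: int, start: float = 1.0, fixed_gain: float = 0.1, self_gain: float = 0.1):
    """Toy comparison of two hypothetical improvement regimes (not a forecast).

    external:  an outside team adds a fixed increment each step    -> linear growth
    recursive: the system's gain is proportional to its own level  -> compounding growth
    """
    external, recursive = start, start
    for _ in range(steps):
        external += fixed_gain
        recursive += self_gain * recursive
    return external, recursive


for steps in (10, 50, 100):
    ext, rec = simulate(steps)
    print(f"after {steps:3d} steps: external {ext:8.2f}   recursive {rec:10.2f}")
```

Both regimes start with the same gain per step. The gap opens only because the recursive system's improvements feed into the size of its next improvement.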

8.2 Potential Pathways to ASI

- Algorithmic Improvement: AI discovers new, more efficient learning techniques.

- Hardware Acceleration: Self-optimized chip design and distributed processing.

- Collective Intelligence: Networked AIs collaborating as a global brain.

- Cognitive Enhancement: Integration with human intelligence through brain-computer interfaces (BCIs).

8.3 Existential Risks and Governance

Without proper safeguards, ASI could pose existential risks — not necessarily malicious, but indifferent to human welfare.

Hence the importance of AI governance, alignment research, and international cooperation — ensuring humanity remains in control of its own creation.

Timeline Predictions: When Will AGI Arrive?

Estimates vary widely:

| Year Range | Estimated Probability | Source |
|---|---|---|
| 2030–2040 | 50% | OpenAI researchers |
| 2040–2060 | 70% | DeepMind & Metaculus forecasts |
| Beyond 2100 | 10% | Skeptical academics |

While no one can predict the exact year, many experts now treat AGI as a question of when rather than if. They see the open question as how responsibly we get there.

Preparing for the Age of AGI

Governments, organizations, and individuals must start preparing now for an AGI future:

- Governance: Establish global safety and ethics standards.

- Education: Equip future generations with creativity, ethics, and systems thinking.

- Economy: Redefine work, ownership, and value creation.

- Philosophy: Reexamine what it means to be intelligent — and to be human.

The Human Element

For all its promise, AGI raises an essential question:

If machines become truly intelligent, what makes us uniquely human?

Perhaps it's empathy, creativity, or moral intuition — qualities rooted not just in cognition, but in conscious experience.

The journey to AGI is as much about understanding ourselves as it is about building intelligent machines.

Conclusion: Walking the Path to Superintelligence

The path to AGI is neither straight nor predictable. It's a convergence of disciplines — computer science, neuroscience, linguistics, ethics, and philosophy — all moving toward a shared goal: understanding intelligence itself.

Whether AGI arrives in 10 years or 100, the key lies not in racing to build it first, but in building it wisely.

"The question is not whether intelligent machines can have emotions,

but

whether machines can be intelligent without emotions."

Humanity's greatest invention could also be its most transformative mirror. The pursuit of AGI is, in many ways, the pursuit of understanding our own mind — at scale.