Last updated: 6 September, 2025

Machine learning (ML) has moved from research labs to real-world products — powering everything from recommendation engines and fraud detection to self-driving cars and predictive maintenance.

But deploying a machine learning model in production is not as simple as training one in a Jupyter notebook.

That's where MLOps comes in — a framework that applies the principles of DevOps to machine learning systems, bridging the gap between data science and engineering to deliver reliable, reproducible, and scalable AI systems.

This guide will walk you through what MLOps is, why it matters, and how to implement it step by step — from data preparation to deployment and monitoring.

What Is MLOps?

MLOps (Machine Learning Operations) is the discipline of managing the end-to-end lifecycle of machine learning models — from development to deployment and ongoing monitoring.

If DevOps focuses on continuous integration and delivery (CI/CD) for code, MLOps extends those principles to models, data, and experiments.

The Goal of MLOps

The goal is to build a system where:

- Data scientists can iterate on models quickly.

- Engineers can deploy models seamlessly to production.

- Operations teams can monitor and maintain performance at scale.

In short, MLOps helps turn "models that work on your laptop" into "models that work reliably in production."

Why MLOps Is Necessary

A common misconception in AI projects is that success ends with training a high-accuracy model. In reality, training is often only a small share of the total work.

The real challenges begin after the model is deployed.

Common Pain Points Without MLOps

| Challenge | Description | Impact |
|---|---|---|
| Model Drift | Data distribution changes over time | Predictions degrade silently |
| Environment Mismatch | Local vs. production environments differ | Model breaks during deployment |
| Manual Deployment | Ad hoc scripts and manual updates | High risk of error |
| Lack of Version Control | No tracking of datasets, models, or experiments | Impossible to reproduce results |
| Slow Collaboration | Data scientists, engineers, and ops teams siloed | Longer delivery cycles |

MLOps solves these challenges by providing automation, reproducibility, and governance for every stage of the ML lifecycle.

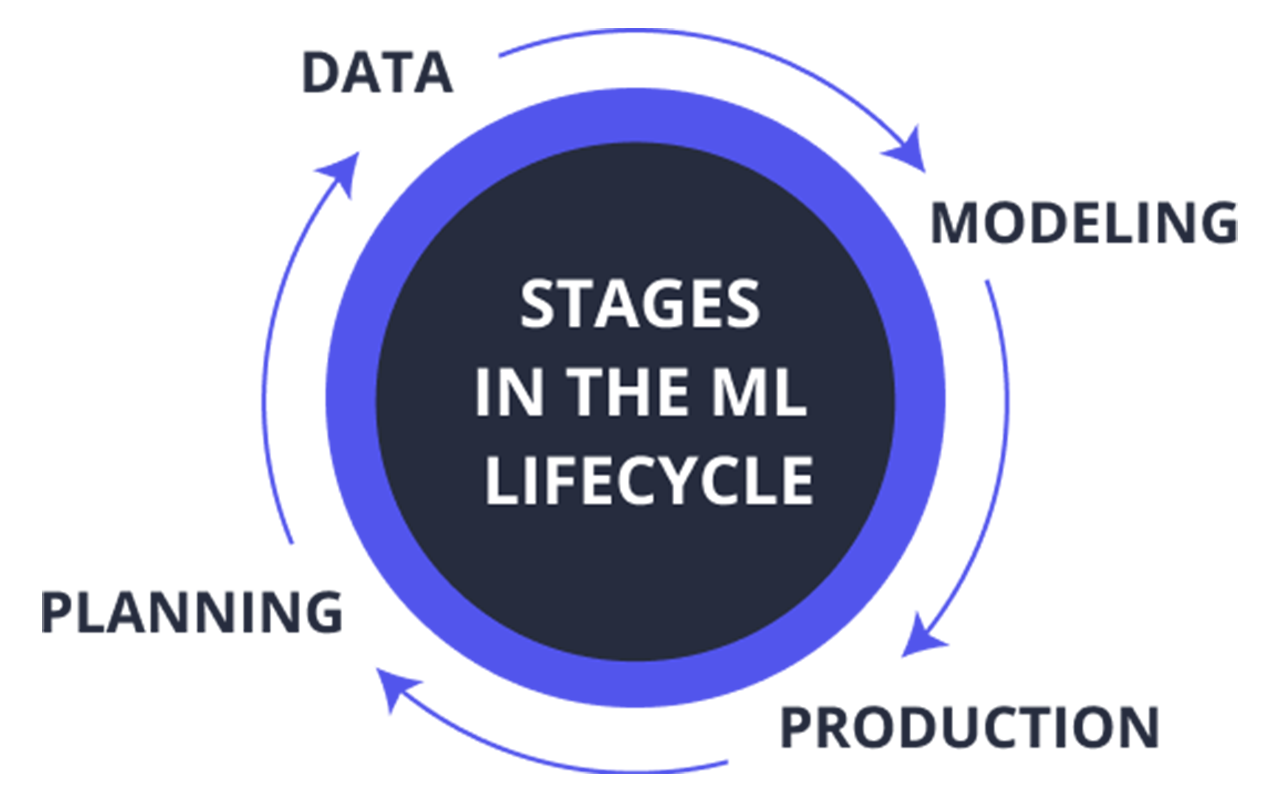

The Machine Learning Lifecycle — End to End

Before we dive into tooling, let's visualize the ML lifecycle that MLOps manages.

Data Collection → Data Preparation → Model Training → Model Validation → Deployment → Monitoring → Feedback Loop

Data Collection & Preparation

- Ingesting raw data from multiple sources (APIs, databases, sensors)

- Cleaning, labeling, and transforming data for training

- Versioning datasets to ensure reproducibility

Tools: Apache Airflow, Great Expectations, DVC (Data Version Control), Delta Lake

Model Training & Experimentation

- Building and testing different model architectures

- Hyperparameter tuning and cross-validation

- Logging experiments and results

Tools: MLflow, Weights & Biases, TensorBoard, Optuna
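To make "logging experiments and results" concrete, here is a minimal in-memory sketch of what an experiment tracker records. It is not the API of MLflow or any other tool listed above; `ExperimentLog`, `Run`, and the parameter and metric names are hypothetical and chosen only for illustration.

```python
import json
from dataclasses import dataclass, asdict


@dataclass
class Run:
    run_id: int
    params: dict   # hyperparameters used for this run
    metrics: dict  # evaluation results for this run


class ExperimentLog:
    """Hypothetical in-memory tracker: one record per training run."""

    def __init__(self):
        self.runs = []

    def log_run(self, params, metrics):
        # Copy the dicts so later mutation by the caller does not alter history.
        run = Run(len(self.runs) + 1, dict(params), dict(metrics))
        self.runs.append(run)
        return run

    def best_run(self, metric, higher_is_better=True):
        scored = [r for r in self.runs if metric in r.metrics]
        if not scored:
            raise ValueError(f"no run has logged metric '{metric}'")
        pick = max if higher_is_better else min
        return pick(scored, key=lambda r: r.metrics[metric])

    def to_jsonl(self):
        # One JSON object per line, a format that is easy to append to and diff.
        return "\n".join(json.dumps(asdict(r), sort_keys=True) for r in self.runs)


log = ExperimentLog()
log.log_run({"lr": 0.1}, {"accuracy": 0.81})
log.log_run({"lr": 0.01}, {"accuracy": 0.88})
log.log_run({"lr": 0.001}, {"accuracy": 0.85})
best = log.best_run("accuracy")
```

A dedicated tracking tool adds persistence, artifact storage, and a UI on top of this basic idea of pairing every run's parameters with its results.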

Model Validation

- Evaluating performance on unseen test sets

- Checking bias, fairness, and robustness

- Comparing performance across versions

Tools: Scikit-learn, Evidently AI, Deepchecks
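As a rough illustration of the validation checks above, the sketch below computes overall accuracy and a simple per-group accuracy gap using only the standard library. The function names and the idea of using the largest accuracy gap between groups as a fairness signal are assumptions made for this example; real fairness audits typically use several metrics.

```python
from collections import defaultdict


def accuracy(y_true, y_pred):
    """Fraction of predictions that match the labels."""
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("inputs must be non-empty and the same length")
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def accuracy_by_group(y_true, y_pred, groups):
    """Accuracy computed separately for each distinct value in `groups`."""
    buckets = defaultdict(lambda: ([], []))
    for t, p, g in zip(y_true, y_pred, groups):
        buckets[g][0].append(t)
        buckets[g][1].append(p)
    return {g: accuracy(ts, ps) for g, (ts, ps) in buckets.items()}


def max_accuracy_gap(per_group):
    """Largest difference in accuracy between any two groups."""
    return max(per_group.values()) - min(per_group.values())


# Toy held-out data; the group labels "a" and "b" are purely illustrative.
y_true = [1, 0, 1, 1, 0, 0]
y_pred = [1, 0, 1, 1, 1, 0]
groups = ["a", "a", "a", "b", "b", "b"]

overall = accuracy(y_true, y_pred)
per_group = accuracy_by_group(y_true, y_pred, groups)
gap = max_accuracy_gap(per_group)
```

Running the same functions against each model version on the same test set gives a like-for-like comparison across versions.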

Model Deployment

- Packaging the trained model (as Docker image or serialized file)

- Deploying to cloud, edge, or on-prem environments

- Supporting real-time or batch inference

Tools: AWS SageMaker, Azure ML, Kubeflow, TensorFlow Serving, BentoML

Monitoring & Maintenance

- Tracking performance drift, data quality, and latency

- Alerting when models degrade or inputs change

- Automating retraining or rollback

Tools: Prometheus, Grafana, WhyLabs, Arize AI, Evidently AI

The Pillars of MLOps

To operationalize ML effectively, you need to think in terms of three main pillars: automation, collaboration, and governance.

Automation: From Manual to Continuous ML

In traditional ML workflows, data scientists manually retrain models and hand over artifacts for deployment. In MLOps, automation takes over — enabling Continuous Integration, Continuous Deployment, and Continuous Training (CI/CD/CT).

🔁 Continuous Integration (CI)

- Automate model testing and validation with each new dataset or code change.

- Run unit tests for data pipelines and model logic.

🚀 Continuous Deployment (CD)

- Automatically package and deploy models to production once validated.

- Use version-controlled pipelines to ensure consistency across environments.

🧠 Continuous Training (CT)

- Retrain models on a schedule, or whenever data drifts or performance drops.

- Trigger retraining automatically based on monitoring metrics.

Outcome: Faster experimentation, safer deployments, and reduced downtime.
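The Continuous Training idea of triggering retraining from monitoring metrics can be sketched as a small decision function. Everything here is an assumption for illustration: the `MonitoringSnapshot` fields, the threshold defaults, and the reason labels are hypothetical and would need tuning for a real system.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class MonitoringSnapshot:
    accuracy: float     # accuracy measured on recent labeled production data
    drift_score: float  # any drift statistic where larger means more drift


def retraining_reasons(snapshot, baseline_accuracy,
                       max_accuracy_drop=0.05, max_drift_score=0.2):
    """Return the reasons to retrain; an empty list means no action is needed."""
    reasons = []
    if baseline_accuracy - snapshot.accuracy > max_accuracy_drop:
        reasons.append("accuracy_drop")
    if snapshot.drift_score > max_drift_score:
        reasons.append("data_drift")
    return reasons


healthy = retraining_reasons(MonitoringSnapshot(0.90, 0.05), baseline_accuracy=0.92)
degraded = retraining_reasons(MonitoringSnapshot(0.80, 0.30), baseline_accuracy=0.92)
```

Returning reasons rather than a bare boolean makes it easier to log why a retraining job was started.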

Collaboration: Bridging Data Science and Engineering

One of the biggest failures in AI projects is poor collaboration between teams. MLOps fosters shared workflows, standardized tools, and clear ownership.

Shared Infrastructure

Data scientists and engineers operate on the same cloud or containerized environments.

Shared Metadata

Models, datasets, and experiments are tracked in central repositories (e.g., MLflow, DVC, or ModelDB).

Shared Culture

Ops teams monitor pipelines, while data scientists focus on improving models — both speaking the same operational language.

Governance: Trust, Compliance, and Reproducibility

Regulatory compliance and ethical considerations are vital in modern AI.

MLOps enables governance through:

- Dataset and model lineage tracking

- Versioned metadata for audits

- Reproducible experiments

- Bias and fairness checks

This ensures models meet both technical and ethical standards before deployment.
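One way to picture lineage tracking and versioned metadata is a record that ties a model to the exact inputs that produced it. The sketch below is an assumption-laden illustration, not the schema of any particular registry: `LineageRecord`, its fields, and the 12-character version ID are all hypothetical.

```python
import hashlib
import json
from dataclasses import dataclass, field, asdict


@dataclass
class LineageRecord:
    dataset_hash: str     # fingerprint of the training data
    code_revision: str    # e.g. a Git commit hash
    hyperparameters: dict
    metrics: dict = field(default_factory=dict)

    def version_id(self):
        # Derived only from the inputs, so identical inputs always map to
        # the same ID regardless of the metrics that were measured later.
        inputs = {
            "dataset_hash": self.dataset_hash,
            "code_revision": self.code_revision,
            "hyperparameters": self.hyperparameters,
        }
        payload = json.dumps(inputs, sort_keys=True).encode("utf-8")
        return hashlib.sha256(payload).hexdigest()[:12]

    def to_audit_json(self):
        # A flat JSON document that can be stored alongside the model artifact.
        return json.dumps({"version_id": self.version_id(), **asdict(self)},
                          sort_keys=True)


# All values below are placeholders for illustration.
record = LineageRecord("abc123", "rev-1", {"max_depth": 6}, {"accuracy": 0.9})
audit_entry = json.loads(record.to_audit_json())
```

With a record like this stored for every deployed model, an auditor can trace a prediction back to the data and code behind it.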

Building Your MLOps Pipeline: A Step-by-Step Framework

Let's walk through the essential steps to implement an MLOps pipeline for your organization.

Step 1: Data Versioning and Validation

Store and version all raw and processed data.

Recommended Tools:

- DVC (Data Version Control): Tracks datasets alongside code in Git.

- Great Expectations: Automates data validation to detect schema or quality issues.

Goal: Never lose track of which data produced which model.
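The two ideas in this step, fingerprinting data and validating it against a schema, can be sketched with the standard library alone. This is not how DVC or Great Expectations are invoked; the function names and `EXPECTED_SCHEMA` are hypothetical stand-ins that show the underlying concept.

```python
import hashlib
import json


def dataset_fingerprint(rows):
    """SHA-256 digest of the rows; stable across key order, sensitive to row order."""
    digest = hashlib.sha256()
    for row in rows:
        digest.update(json.dumps(row, sort_keys=True).encode("utf-8"))
        digest.update(b"\n")
    return digest.hexdigest()


# Hypothetical schema: column name -> required Python type.
EXPECTED_SCHEMA = {"user_id": int, "amount": float, "country": str}


def validate_rows(rows, schema):
    """Return a list of human-readable problems; an empty list means valid."""
    problems = []
    for i, row in enumerate(rows):
        for column in sorted(schema.keys() - row.keys()):
            problems.append(f"row {i}: missing column '{column}'")
        for column, expected_type in schema.items():
            if column in row and not isinstance(row[column], expected_type):
                problems.append(
                    f"row {i}: '{column}' should be {expected_type.__name__}")
    return problems


good_rows = [{"user_id": 1, "amount": 9.5, "country": "DE"}]
bad_rows = [{"user_id": "x", "amount": 1.0}]

fingerprint = dataset_fingerprint(good_rows)
problems = validate_rows(bad_rows, EXPECTED_SCHEMA)
```

Storing the fingerprint next to each trained model is one simple way to record which data produced which model.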

Step 2: Automate Training Pipelines

Orchestrate your ML workflow using a pipeline engine.

Recommended Tools:

- Apache Airflow or Kubeflow Pipelines for scheduling and dependency management.

- MLflow for experiment tracking and artifact management.

Goal: Turn your ad hoc scripts into automated, reproducible workflows.
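At its core, a pipeline engine runs tasks in dependency order and passes results downstream. The sketch below shows that core idea with Python's standard `graphlib` module (Python 3.9+). It is not Airflow or Kubeflow code; `run_pipeline` and the three toy tasks are hypothetical, and the "model" is just the mean of the data.

```python
from graphlib import TopologicalSorter


def run_pipeline(tasks, dependencies):
    """Run each task after its upstream tasks.

    tasks: name -> callable(results) returning that task's output
    dependencies: name -> set of upstream task names
    """
    graph = {name: dependencies.get(name, set()) for name in tasks}
    results = {}
    order = []
    # static_order() yields every task after all of its dependencies
    # and raises graphlib.CycleError if the graph contains a cycle.
    for name in TopologicalSorter(graph).static_order():
        results[name] = tasks[name](results)
        order.append(name)
    return results, order


def prepare(results):
    return [1.0, 2.0, 3.0, 4.0]


def train(results):
    data = results["prepare"]
    return sum(data) / len(data)  # toy "model": predict the mean


def validate(results):
    data, model = results["prepare"], results["train"]
    return sum(abs(x - model) for x in data) / len(data)  # mean absolute error


results, order = run_pipeline(
    {"validate": validate, "train": train, "prepare": prepare},
    {"train": {"prepare"}, "validate": {"prepare", "train"}},
)
```

Production orchestrators add scheduling, retries, and distributed execution, but the dependency graph is the same mental model.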

Step 3: Containerize and Deploy Models

Package your trained model into a container for easy deployment.

Recommended Tools:

- Docker + Kubernetes: Standard for scalable model serving.

- BentoML or TorchServe: Frameworks for API-based model deployment.

- SageMaker / Azure ML / Vertex AI: Cloud-native deployment and scaling.

Goal: Move from "works on my laptop" to "works in production."
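Before a model goes into a container, it needs a portable artifact and a request handler around it. The following is a framework-free sketch under stated assumptions: a linear model stored as JSON, a hypothetical `LinearModelService` class, and a `handle` method that maps a JSON request body to a status code and JSON response. Serving frameworks such as BentoML or TorchServe have their own APIs, which are not shown here.

```python
import json


def export_model(weights, bias, version):
    """Serialize a linear model to a JSON artifact (illustrative format)."""
    return json.dumps({"version": version, "weights": weights, "bias": bias})


class LinearModelService:
    def __init__(self, artifact):
        spec = json.loads(artifact)
        self.version = spec["version"]
        self.weights = spec["weights"]
        self.bias = spec["bias"]

    def predict(self, features):
        if len(features) != len(self.weights):
            raise ValueError(
                f"expected {len(self.weights)} features, got {len(features)}")
        return sum(w * x for w, x in zip(self.weights, features)) + self.bias

    def handle(self, request_body):
        """JSON in, (status_code, JSON) out; easy to wrap in any HTTP server."""
        try:
            features = json.loads(request_body)["features"]
            prediction = self.predict(features)
        except (KeyError, TypeError, ValueError) as exc:
            # Malformed JSON raises json.JSONDecodeError, a ValueError subclass.
            return 400, json.dumps({"error": str(exc)})
        return 200, json.dumps(
            {"model_version": self.version, "prediction": prediction})


artifact = export_model([0.5, -1.0], 2.0, "v1")
service = LinearModelService(artifact)
status, body = service.handle('{"features": [4.0, 1.0]}')
```

Returning the model version with every prediction makes it possible to trace a response back to the exact artifact that produced it.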

Step 4: Set Up CI/CD Pipelines

Automate integration and deployment of new models.

Recommended Tools:

- GitHub Actions / GitLab CI / Jenkins: Trigger retraining and deployment on commit.

- Terraform / Helm: Manage infrastructure as code for reproducibility.

Goal: Reduce human error and speed up delivery cycles.
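A CI/CD pipeline for models usually includes a quality gate that decides whether a candidate may replace the production model. Here is a minimal sketch of such a gate; the metric names, the thresholds, and the `promotion_gate` function are assumptions for illustration, not part of any CI tool.

```python
def promotion_gate(candidate, production, min_gain=0.0, max_latency_ms=100.0):
    """Return (approved, reasons); reasons lists every failed check."""
    reasons = []
    required = production["accuracy"] + min_gain
    if candidate["accuracy"] < required:
        reasons.append(
            f"accuracy {candidate['accuracy']:.3f} is below required {required:.3f}")
    if candidate["latency_ms"] > max_latency_ms:
        reasons.append(
            f"latency {candidate['latency_ms']:.1f} ms exceeds {max_latency_ms:.1f} ms")
    return not reasons, reasons


# Illustrative metrics, as they might be read from an evaluation report.
production_metrics = {"accuracy": 0.89, "latency_ms": 35.0}
candidate_metrics = {"accuracy": 0.91, "latency_ms": 40.0}

approved, reasons = promotion_gate(candidate_metrics, production_metrics)
```

In a CI job, a script like this would typically exit with a non-zero status when `approved` is false, which stops the deployment stage from running.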

Step 5: Implement Monitoring and Feedback Loops

Measure real-world performance and detect data or concept drift.

Metrics to Track:

- Model accuracy and latency

- Input data drift

- Feature importance shift

- Infrastructure health

Recommended Tools:

- Prometheus + Grafana: Infrastructure and metrics dashboards

- WhyLabs / Arize / Evidently AI: Specialized ML monitoring platforms

Goal: Ensure models perform as intended and trigger retraining when necessary.
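Input data drift is often quantified with a statistic such as the Population Stability Index (PSI), which compares the binned distribution of a feature in production against a training-time baseline. The sketch below is one simple way to compute it; the equal-width binning, the small floor used to avoid division by zero, and the function name are choices made for this example rather than a standard taken from any of the tools above.

```python
import math


def population_stability_index(expected, actual, bins=10):
    """PSI between a baseline sample and a recent sample of one numeric feature."""
    if not expected or not actual:
        raise ValueError("both samples must be non-empty")
    lo, hi = min(expected), max(expected)
    if lo == hi:
        raise ValueError("baseline has no spread; cannot build bins")
    width = (hi - lo) / bins

    def proportions(values):
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            # Clamp values outside the baseline range into the edge bins.
            counts[min(max(idx, 0), bins - 1)] += 1
        # Floor each share at a tiny value so the logarithm is always defined.
        return [max(c / len(values), 1e-6) for c in counts]

    e = proportions(expected)
    a = proportions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


baseline = [float(v) for v in range(100)]
shifted = [v + 50.0 for v in baseline]

psi_same = population_stability_index(baseline, baseline)
psi_shifted = population_stability_index(baseline, shifted)
```

A commonly quoted rule of thumb treats a PSI above roughly 0.25 as a significant shift, but the right alert threshold depends on the feature and should be validated against your own data.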

The MLOps Tech Stack — 2025 Edition

| Stage | Tools | Purpose |
|---|---|---|
| Data Management | DVC, Delta Lake, Feast | Versioning and feature store |
| Pipeline Orchestration | Airflow, Kubeflow, Prefect | Automate ML workflows |
| Experiment Tracking | MLflow, Weights & Biases | Manage experiments and results |
| Model Deployment | BentoML, TensorFlow Serving, Seldon Core | Serve models in production |
| Monitoring & Logging | Prometheus, Arize, WhyLabs | Track model drift and uptime |
| Infrastructure | Docker, Kubernetes, Terraform | Containerization and IaC |

Each layer integrates to create a feedback-driven, automated ecosystem that supports continuous improvement.

MLOps in the Cloud: AWS, Azure, and GCP

Cloud platforms now offer native MLOps solutions that abstract much of the complexity.

🟡 AWS SageMaker

- Built-in model registry, pipeline automation, and deployment

- Integration with CloudWatch for monitoring

🔵 Azure Machine Learning

- Drag-and-drop pipeline builder

- Responsible AI toolkit for bias and interpretability checks

🔴 Google Vertex AI

- Unified environment for training, deployment, and monitoring

- AutoML and pipeline integration with TensorFlow Extended (TFX)

Choosing a platform depends on your existing cloud infrastructure and compliance requirements.

Case Study: MLOps in Action

🎯 Problem:

A retail company's recommendation model degraded over time due to changing product data and customer behavior. Manual retraining caused downtime and inconsistent results.

🧩 Solution:

They adopted an MLOps pipeline using:

- Airflow for pipeline orchestration

- MLflow for experiment tracking

- Docker + Kubernetes for deployment

- Prometheus for monitoring

🚀 Results:

- Model retraining time reduced from 3 days to 3 hours

- 25% increase in recommendation accuracy

- Seamless CI/CD workflow across teams

This is the power of automation and reproducibility in machine learning.

Best Practices for Successful MLOps Adoption

- Start Small: Automate one stage (e.g., deployment) before the entire lifecycle.

- Embrace Version Control: Treat data and models like code.

- Monitor Everything: Data drift, latency, bias — all matter.

- Integrate Early with DevOps: Align processes with existing CI/CD pipelines.

- Encourage Collaboration: Data scientists, engineers, and ops must work as one team.

- Design for Scalability: Use containerization and cloud-native services.

- Invest in Explainability: Regulators and users need transparency.

The Future of MLOps

MLOps continues to evolve as AI adoption accelerates.

Emerging Trends:

- AutoMLOps: Fully automated pipelines for model training and deployment.

- Edge MLOps: Managing models deployed on IoT and edge devices.

- LLMOps: Extending MLOps principles to large language models (LLMs).

- Hybrid Cloud MLOps: Federated learning and on-prem + cloud integration.

- Responsible MLOps: Integrating fairness, accountability, and compliance by design.

As AI systems become more complex, MLOps will be the foundation ensuring their reliability, safety, and scalability.

Conclusion: Turning ML Chaos into ML Confidence

Building machine learning models is hard — but maintaining them in production is even harder.

MLOps transforms that chaos into confidence by creating a structured, automated, and auditable process for managing the ML lifecycle.

It's not just about tools or pipelines — it's a culture of collaboration, iteration, and accountability.

Whether you're an AI startup or a Fortune 500 enterprise, adopting MLOps is no longer optional — it's the only way to scale AI responsibly and efficiently.

"The success of AI in production doesn't depend on the smartest model — it depends on the smartest process."